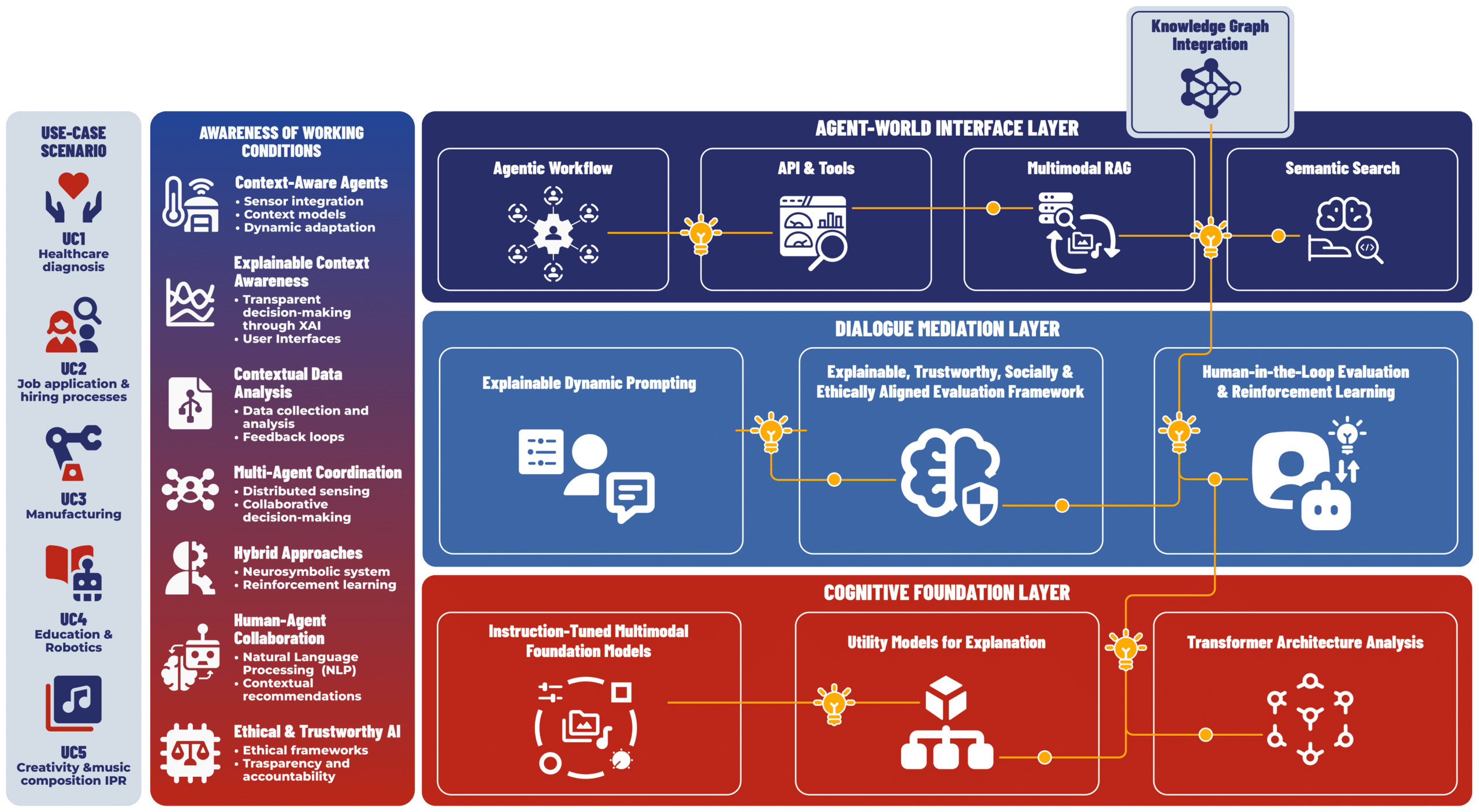

The AIXPERT Solution

AIXPERT aims to build robust, explainable, transparent, and autonomous AI systems centred on user needs, an approach captured by the concept of human-centred AI. The project develops a multi-layered framework that strengthens explainability, accountability, autonomy, and robustness in diverse environments.

Its core innovation is an AI-agentic backbone capable of reasoning about its actions, making decisions, and interacting naturally with users. The backbone integrates machine learning, knowledge representation, and reasoning while ensuring ethical alignment and user trust.

The architecture is structured into three interconnected layers:

1. Agent-World Interface Layer

2. Dialogue Mediation Layer

manages communication between users and agents through advanced prompting, model evaluation, and human-in-the-loop workflows to improve explainability.

3. Cognitive Foundation Layer

provides base capabilities through foundation models, supporting alignment and interpretability via instruction sets, auxiliary models, and circuit-level analysis.
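To make the division of labour between the upper two layers more concrete, the following Python sketch shows one way a Dialogue Mediation Layer could wrap a user query in a prompt, evaluate the model's output, and route low-confidence answers to a human reviewer. It is a minimal illustration under stated assumptions, not the AIXPERT implementation: the `foundation_model` stub stands in for the Cognitive Foundation Layer, and `ModelOutput`, `mediate`, the self-reported `confidence` score, and the 0.8 escalation threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ModelOutput:
    answer: str
    rationale: str      # explanation surfaced to the user
    confidence: float   # hypothetical self-reported score in [0, 1]


def foundation_model(prompt: str) -> ModelOutput:
    """Hypothetical stub for the Cognitive Foundation Layer (no real model is called)."""
    if "capital of France" in prompt:
        return ModelOutput("Paris", "Well-known geographic fact.", 0.95)
    return ModelOutput("Unsure", "No supporting knowledge found.", 0.30)


def mediate(user_query: str,
            model: Callable[[str], ModelOutput],
            reviewer: Callable[[ModelOutput], bool],
            threshold: float = 0.8) -> dict:
    """Dialogue Mediation Layer sketch: apply a prompt template, evaluate the
    output's confidence, and escalate low-confidence answers to a human."""
    prompt = f"Answer concisely and explain your reasoning.\nQuestion: {user_query}"
    out = model(prompt)
    needs_review = out.confidence < threshold
    # Human-in-the-loop step: only low-confidence answers go to the reviewer.
    approved = reviewer(out) if needs_review else True
    return {
        "answer": out.answer if approved else None,
        "rationale": out.rationale,   # kept even if rejected, for explainability
        "human_reviewed": needs_review,
    }


# Usage: a reviewer that rejects everything it is shown.
auto = mediate("What is the capital of France?", foundation_model, reviewer=lambda out: False)
print(auto["answer"], auto["human_reviewed"])              # Paris False
escalated = mediate("Will it rain next year?", foundation_model, reviewer=lambda out: False)
print(escalated["answer"], escalated["human_reviewed"])    # None True
```

Returning the rationale even when an answer is rejected is one way to serve the explainability goal: the reviewer and the user can both see why the model answered as it did.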

Within these layers, the system implements key components:

- Agent Profiling – defines agent roles, functions, and documentation.

- Agent Memory – combines short-term and long-term storage (e.g., retrieval-augmented generation (RAG) backed by vector databases) to ensure consistency.

- Agent Actions – translates decisions into tool interactions with structured outputs.

- Agent Planning – supports reasoning strategies (Chain of Thought (CoT), Tree of Thought (ToT), Graph of Thought (GoT), Algorithm of Thought (AoT)) for complex problem-solving.

- Agent Orchestration – coordinates multiple agents, ensuring collaboration, task allocation, and conflict resolution.
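The memory and action components above can be illustrated with a small, self-contained Python sketch. It assumes a bounded buffer for short-term memory and an in-memory list of (embedding, text) pairs searched by cosine similarity as a stand-in for a vector database; the embeddings are hand-written toy vectors, not the output of a real embedding model. The tool registry (`TOOLS`), the JSON action format, and all class and function names are hypothetical illustrations rather than AIXPERT's actual interfaces.

```python
from __future__ import annotations

import json
import math
from collections import deque
from typing import Callable


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class AgentMemory:
    """Short-term: bounded buffer of recent turns.
    Long-term: (embedding, text) pairs, a toy stand-in for a vector database."""

    def __init__(self, short_term_size: int = 3):
        self.short_term: deque[str] = deque(maxlen=short_term_size)
        self.long_term: list[tuple[list[float], str]] = []

    def remember_turn(self, text: str) -> None:
        self.short_term.append(text)  # oldest turn is dropped when full

    def store(self, embedding: list[float], text: str) -> None:
        self.long_term.append((embedding, text))

    def retrieve(self, query_embedding: list[float], k: int = 1) -> list[str]:
        """Return the k stored texts most similar to the query (the 'R' in RAG)."""
        ranked = sorted(self.long_term,
                        key=lambda item: cosine(query_embedding, item[0]),
                        reverse=True)
        return [text for _, text in ranked[:k]]


# Hypothetical tool registry for Agent Actions.
TOOLS: dict[str, Callable[..., object]] = {
    "add": lambda a, b: a + b,
    "lookup": lambda key: {"project": "AIXPERT"}.get(key, "unknown"),
}


def execute_action(raw_output: str) -> object:
    """Parse a structured action such as {"tool": "add", "args": {"a": 2, "b": 3}}
    and dispatch it to a registered tool, rejecting malformed or unknown calls."""
    action = json.loads(raw_output)
    if not isinstance(action, dict) or "tool" not in action:
        raise ValueError("action must be a JSON object with a 'tool' field")
    tool = TOOLS.get(action["tool"])
    if tool is None:
        raise ValueError(f"unknown tool: {action['tool']}")
    return tool(**action.get("args", {}))


# Usage
mem = AgentMemory(short_term_size=2)
for turn in ["hi", "plan a trip", "book a hotel"]:
    mem.remember_turn(turn)
print(list(mem.short_term))                      # ['plan a trip', 'book a hotel']
mem.store([1.0, 0.0], "User prefers trains.")
mem.store([0.0, 1.0], "User is vegetarian.")
print(mem.retrieve([0.9, 0.1]))                  # ['User prefers trains.']
print(execute_action('{"tool": "add", "args": {"a": 2, "b": 3}}'))  # 5
```

In a full RAG pipeline, the texts returned by `retrieve` would be inserted into the model's prompt; validating the action JSON before dispatch keeps tool use restricted to a known, structured interface.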

Together, these mechanisms create a transparent, multi-agent AI system designed to foster trust, usability, and dynamic interaction with the real world.